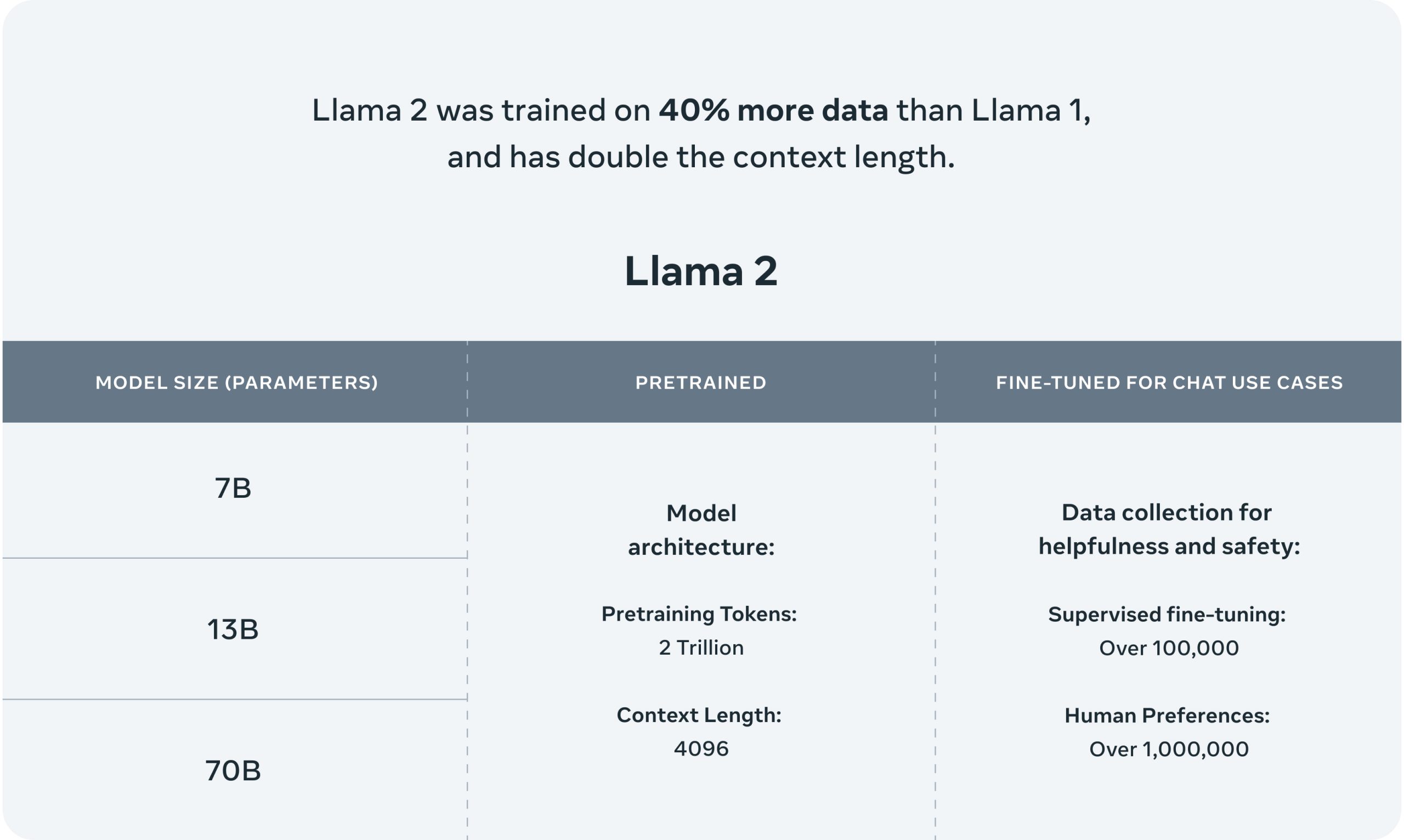

- I am a total newbie to the LLM space.
- Models in Ollama do not contain any code.
- I have 2 more PCI slots and was wondering if …
- OK, so Ollama doesn't have a stop or exit command.
- Stop Ollama from running on the GPU: I need to run Ollama and Whisper simultaneously.
- As the title says, I am trying to get a decent model for coding/fine-tuning on a lowly NVIDIA GTX 1650 card.
- To get rid of the model, I needed to install Ollama again and then run `ollama rm llama2`.
- I'm using Ollama to run my models.
- The ability to run LLMs locally and which could give output faster …
- It should be transparent where it installs, so I can remove it later.
- Hey guys, I mainly run my models with Ollama, and I am looking for suggestions for uncensored models that I can use with it.
- Is it compatible with Ollama, or should I go with an RTX 3050 or 3060?
- Are multiple GPUs supported?
- We have to manually kill the process.
- As I have only 4 GB of VRAM, I am thinking of running Whisper on the GPU and Ollama on the CPU.
- I'm running Ollama on an Ubuntu server with an AMD Threadripper CPU and a single GeForce 4070.
- I decided to try out Ollama after watching a YouTube video.
- Hey, I am trying to build a PC with an RX 580.
- How do I force …
- And this is not very useful, especially because the server respawns immediately.
- Since there are a lot already, I feel a bit …
- I want to use the Mistral model but create a LoRA so that it acts as an assistant that primarily references data I've supplied during training.
- These are just mathematical weights.
- I am excited about Phi-2, but some of the posts …
- Like any software, Ollama will have vulnerabilities that a bad actor can exploit.
- How can I make Ollama faster with an integrated GPU?
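Several of these posts ask how to keep Ollama off a small GPU (for example, to leave 4 GB of VRAM free for Whisper) and how to release a loaded model without killing the server process. The following is a minimal sketch, assuming a local Ollama server on its default address (`http://localhost:11434`) and its `/api/generate` endpoint. It relies on two request fields: `options.num_gpu`, the number of model layers offloaded to the GPU (0 requests CPU-only inference), and `keep_alive`, which set to 0 asks the server to unload the model right after responding. Whether `num_gpu: 0` keeps every allocation off the GPU may vary with the Ollama version and backend, so treat this as a starting point rather than a guarantee. The helper names `build_cpu_only_request` and `generate` are hypothetical.

```python
import json
import urllib.request

# Assumption: Ollama is listening on its default local address.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_cpu_only_request(model: str, prompt: str) -> dict:
    """Hypothetical helper: build a /api/generate payload that keeps the model on the CPU."""
    return {
        "model": model,
        "prompt": prompt,
        "stream": False,  # return one JSON object instead of a stream of chunks
        # num_gpu = number of layers offloaded to the GPU; 0 requests CPU-only inference
        "options": {"num_gpu": 0},
        # keep_alive = 0 asks the server to unload the model right after this request
        "keep_alive": 0,
    }


def generate(payload: dict, url: str = OLLAMA_URL) -> str:
    """Hypothetical helper: POST the payload and return the generated text."""
    data = json.dumps(payload).encode("utf-8")
    req = urllib.request.Request(
        url, data=data, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(req) as resp:
        return json.loads(resp.read())["response"]


if __name__ == "__main__":
    payload = build_cpu_only_request("mistral", "Say hello in one sentence.")
    print(json.dumps(payload, indent=2))
    # Uncomment when an Ollama server is running locally:
    # print(generate(payload))
```

Because `keep_alive` is 0, the model does not stay resident in memory between requests, which trades some reload latency for freeing RAM or VRAM for other workloads.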